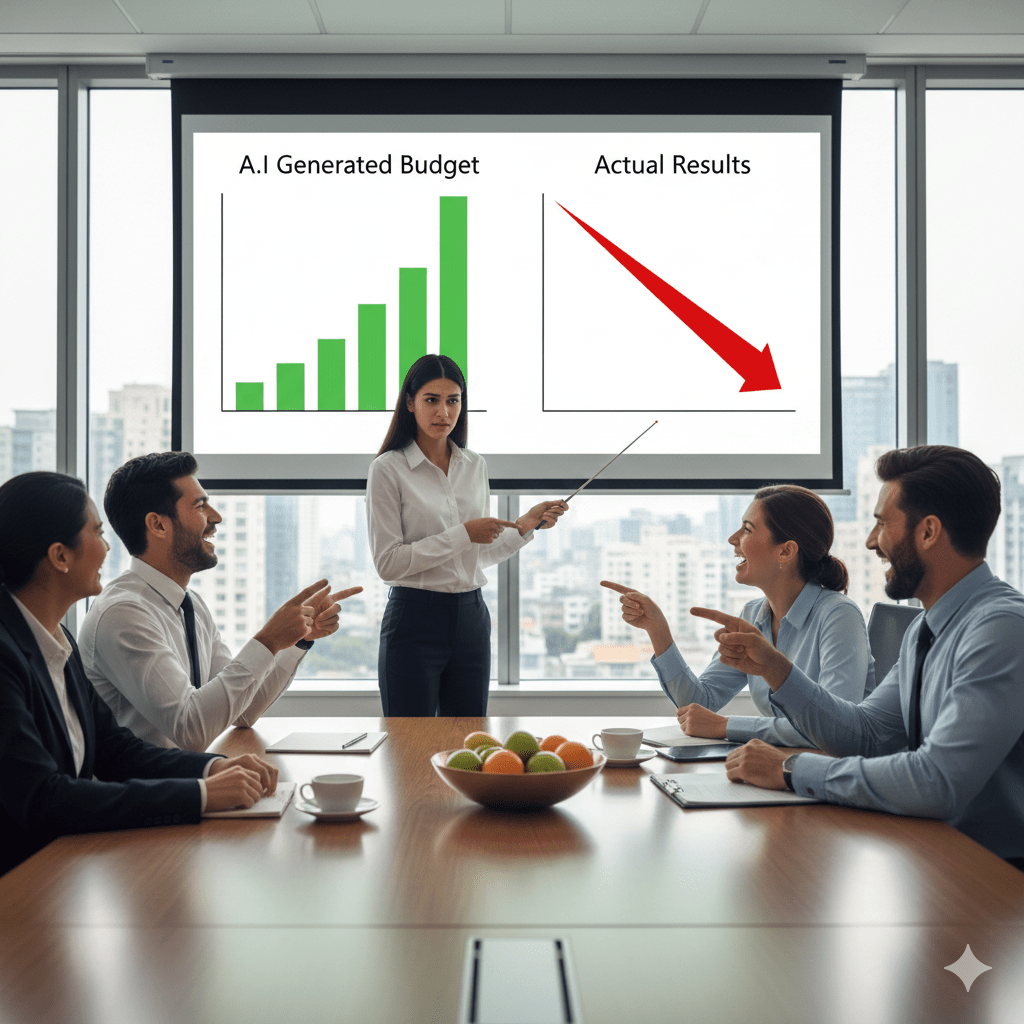

Can you spot the more complex and focused systems error embedded in this illustration? The answer appears below.

When information technologies change slowly and modestly, corporate executives need not struggle to build their internal control systems. They can simply purchase such systems from vendors that have designed them to reflect existing governance standards.

During periods of rapid progress, though, these vendors (and the standard setters they follow) may not be able to keep pace with newly emerging technologies. As a result, corporate executives may be unable to find suitable internal control systems available for purchase.

Nevertheless, insurance companies, investors, external auditors, regulators, and other parties always expect corporate executives to maintain effective internal control systems. But how can executives meet that expectation when such systems are not commercially available?

Their only option is to design their own in-house control standards and systems as best they can. Of course, they can always hire consultants to assist them, but they would still need to design their own assessment standards to evaluate their consultants’ activities.

This challenge is particularly onerous in very broad fields like Artificial Intelligence (AI), fields in which user errors and other risk factors can occur at many different levels of complexity. Consider, for instance, a researcher who uses a Large Language Model (LLM) to seek information. She may inadvertently prompt her AI platform to produce erroneous information for a variety of reasons.

At a complex level, for instance, she may fail to notice a subtle logic error in her AI input, an error that causes a significant AI hallucination. At a far simpler level, she may just forget to conclude a lengthy and detailed AI query with a brief synopsis of her need. In either case, the AI system may produce erroneous output.

How should corporate executives address such broadly defined sets of risks in their self-designed control systems? An effective approach, consistent with the COSO models of internal control and risk management, is to define a hierarchy of risk factors across the full range of complexity levels. After defining the complexity levels and their risk factors, organizations can design controls that address the highest-priority risk factor(s) at each level.

For instance, an organization may believe that the simplest and broadest risk factor when composing LLM queries is the user's ignorance of basic grammar and spelling. A slightly more complex and focused level of risk may be the user's ignorance of general business vocabulary.

A still higher level of complexity and focus may be the user's ignorance of advanced technical business vocabulary. The two most complex and focused levels may be the user's inexperience in applying such vocabulary to AI tasks and, at the top, his or her inexperience in incorporating large data sets into those tasks.

Can you see how each level of risk represents a more complex and focused extension of the previous level? This hierarchical structure is consistent with the COSO models. By understanding how each level influences (and, in fact, partly determines) the next one, we can more effectively design control systems that minimize overall risk across the multiple levels.
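For organizations that document their control designs in a script or spreadsheet, the five-level hierarchy described above might be recorded along the following lines. This is only a minimal Python sketch under stated assumptions: it is not part of the COSO models, the names `RiskLevel`, `HIERARCHY`, `uncovered_levels`, and `is_ordered` are hypothetical, and the control descriptions are illustrative placeholders rather than recommended controls.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskLevel:
    rank: int         # 1 = simplest and broadest; higher = more complex and focused
    risk_factor: str  # highest-priority risk factor at this level
    control: str      # hypothetical control addressing that factor


# The five risk levels follow the example in the text; the controls are assumptions.
HIERARCHY = [
    RiskLevel(1, "Ignorance of basic grammar and spelling",
              "Spelling and grammar check before a query is submitted"),
    RiskLevel(2, "Ignorance of general business vocabulary",
              "Review of key terms against a business glossary"),
    RiskLevel(3, "Ignorance of advanced technical business vocabulary",
              "Subject-matter expert review of technical terms"),
    RiskLevel(4, "Inexperience applying such vocabulary to AI tasks",
              "Peer review of prompts before use"),
    RiskLevel(5, "Inexperience incorporating large data sets into AI tasks",
              "Data-validation checklist for any attached data set"),
]


def uncovered_levels(hierarchy):
    """Return the ranks of levels that have no control defined."""
    return [level.rank for level in hierarchy if not level.control.strip()]


def is_ordered(hierarchy):
    """Check that each level directly extends the previous one (ranks rise by 1)."""
    return all(b.rank == a.rank + 1 for a, b in zip(hierarchy, hierarchy[1:]))


if __name__ == "__main__":
    for level in HIERARCHY:
        print(f"Level {level.rank}: {level.risk_factor} -> {level.control}")
    print("Ordered:", is_ordered(HIERARCHY))
    print("Levels without controls:", uncovered_levels(HIERARCHY))
```

Keeping each level as a separate record makes gaps easy to spot: if a level's control is left blank, `uncovered_levels` reports its rank, so reviewers can see at a glance which part of the hierarchy remains unaddressed.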

Eventually, of course, the pace of technological change will slow, and control system designers will catch up with AI technologies. Commercial systems will then be developed and offered for purchase by corporate executives.

Until that time, though, organizations will continue to develop their own in-house control systems. Fortunately, by adopting an effectively structured approach, organizations should be able to succeed at this task.

Image caption note: Google Gemini created this illustration of an AI control system for producing budgets, one that (erroneously) generates output bearing no resemblance to actual results. Can you also spot the more complex and focused systems error embedded in the illustration? All of the colleagues seated at the conference table are twins! Clearly, the user's AI prompts and queries failed to anticipate or prevent this error.