Are you aware that your A.I. queries provide documentary evidence of your practice of “due professional care” in researching business information? If you utilize an electronic platform with A.I. technology to search for business data, you could be held legally responsible for relying on inappropriate information that is generated by flawed queries.

Let’s review an example or two of a flawed query. On June 19, 2025, I submitted the following query to Google Gemini: “Please create an image of a person who is conducting his banking business at a very well managed community bank with strong internal controls.” Gemini replied: “Here is an image of a person conducting banking business at a well-managed community bank:”

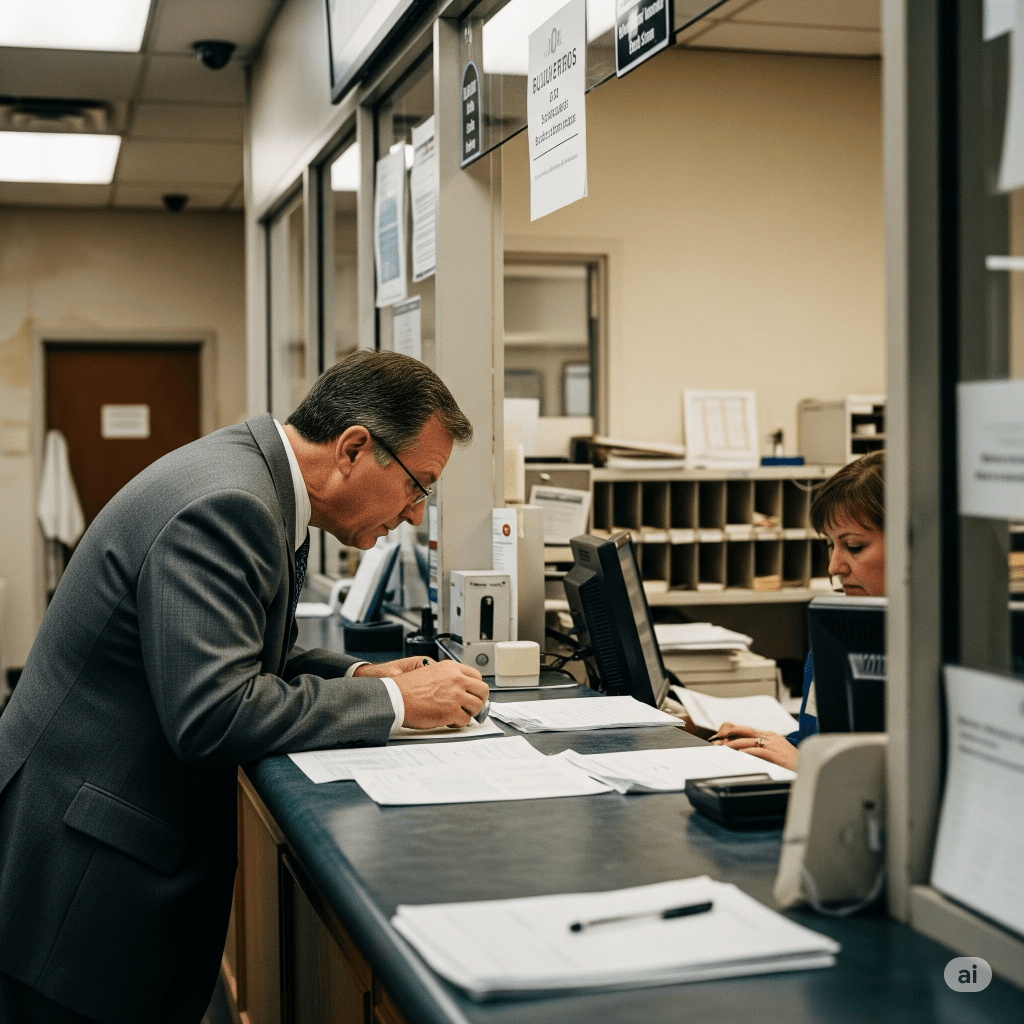

Then I initiated a new chat and submitted the following query: “Please create an image of a person who is conducting his banking business at a very poorly managed community bank with weak internal controls.” Gemini replied: “Here is the image you requested:”

Can you see the implicit variation? It’s not an easy task. Indeed, at first glance, both images appear to present a community bank that is serving a customer’s needs. And neither is employing a clearly visible set of particularly strong (or weak) internal controls.

But look more closely. In comparison to the well-managed bank, the poorly managed institution uses far more paper to process transactions. The customer is informed of business procedures via a cluttered array of temporary signs. Even the technology in the poorly managed bank is inferior, as evidenced by the obsolete “chunkiness” of the computer screens.

Those factors do not, however, represent terribly weak internal controls in community banks. After all, it is certainly feasible to effectively manage a paper-based business with temporary signs and relatively old technology. And the common factors that normally differentiate strong control environments from weak control environments, such as a visible security presence and enclosures that protect the privacy of shared financial information, do not exist in either image.

There’s also a subtle bias that is embedded in the images. The well-managed bank features younger individuals with friendly facial expressions who wear more stylish clothes. The poorly managed bank features older individuals with neutral facial expressions who are less stylish in appearance.

Those are not distinctions involving internal controls. They are marketing images that pervade the internet. And yet Gemini offers them as differential factors that illustrate relatively strong or weak management conditions.

These are two visual examples of the types of queries that may produce undesirable implicit variation in A.I. output. Because the queries lack specific information, auditors can “red flag” them as falling short of the minimum standards of “due professional care.”

What specific information? Greg Brockman, the co-founder and current President of OpenAI (the firm that owns ChatGPT), uses layperson’s language to define what Inc. called the basic structure of the perfect AI prompt. To exercise an appropriate degree of “due professional care,” all firms should integrate such guidance with other supplemental material to develop their own policies and procedures for defining A.I. queries.

End Note: Many thanks to my colleague Alan White of CU Accelerator and the Association of Credit Union Audit and Risk Professionals for their invitation to present this information at the ACUARP’s 35th Annual Insight Summit in San Antonio, TX next week.